Goals and Background

This lab will entail the following:

1. Delineating a study area from a larger satellite scene

2. Demonstrating how spatial resolution of images can be optimized for visual purposes

3. Introducing radiometric enhancement techniques

4. Linking Erdas viewers to Google Earth

5. Resampling images using nearest neighbor and bilinear interpolation

6. Exploring image mosaicking

7. Exercising binary change detection

These are seven basic tasks which all aid in visual interpretation of images.

Methods

Part 1: Delineating a Study Area From a Larger Satellite Scene

| Fig 1.0: Using an Inquire Box to Determine a Study Area |

The second way to create a subset is to use a shapefile. To do this, one first brings in the shapefile that contains the boundary of the desired study/subset area from the File menu. Then, the user highlights the shapefile and saves it as an AOI (area of interest). To make the subset from this AOI, the user once again navigates to Raster → Subset & Chip → Create Subset Image, but this time, instead of clicking From Inquire Box, the user clicks AOI at the bottom of the Subset window and clicks OK to create the subset.
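The idea behind an AOI subset can be sketched outside of Erdas in a few lines of Python. This is only an illustration under stated assumptions, not what the Erdas tool does internally: the image is a small 2D list, the polygon is given in pixel coordinates (column, row) rather than map coordinates, and the names `point_in_polygon` and `subset_by_aoi` are hypothetical.

```python
import math


def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon vertex list."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Count the edges crossed by a horizontal ray running right from (x, y).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def subset_by_aoi(img, polygon, nodata=0):
    """Crop to the polygon's bounding box and blank pixels centered outside it."""
    xs = [p[0] for p in polygon]
    ys = [p[1] for p in polygon]
    c0 = max(math.floor(min(xs)), 0)
    c1 = min(math.ceil(max(xs)) - 1, len(img[0]) - 1)
    r0 = max(math.floor(min(ys)), 0)
    r1 = min(math.ceil(max(ys)) - 1, len(img) - 1)
    return [[img[r][c] if point_in_polygon(c + 0.5, r + 0.5, polygon) else nodata
             for c in range(c0, c1 + 1)]
            for r in range(r0, r1 + 1)]


# Hypothetical 4 x 4 image and triangular AOI, for illustration only.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
aoi = [(0, 0), (4, 0), (0, 3)]
print(subset_by_aoi(image, aoi))
```

Unlike an inquire box, the output here follows the shape of the polygon: pixels outside the AOI are set to a no-data value rather than kept.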

Part 2: Demonstrating how Spatial Resolution of Images can be Optimized for Visual Purposes

To create a higher spatial resolution image, one first needs two images of different spatial resolutions. One then opens the Resolution Merge function by navigating to Raster → Pan Sharpen → Resolution Merge. Once the tool is open, one inputs the higher resolution image, then inputs the multispectral image, which has a coarser resolution, and specifies the output file. A couple of settings are changed before running the tool: the method is set to Multiplicative and the resampling technique is set to Nearest Neighbor. Running the tool generates a pan-sharpened image with the same spatial resolution as the higher resolution input image.
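As a rough illustration of these two settings, the sketch below multiplies each pixel of a high resolution band by the coarse multispectral pixel that covers it, using nearest neighbor lookup. It assumes the multiplicative method is a plain per-pixel product; the actual Erdas tool may rescale or stretch the result, and the function name `multiplicative_merge` and the sample values are hypothetical.

```python
def multiplicative_merge(pan, ms_band, factor):
    """Multiply each high resolution pixel by the coarse pixel that covers it.

    `factor` is the ratio of coarse to fine pixel size (2 for 30 m and 15 m).
    The coarse band is looked up with nearest neighbor resampling.
    """
    out = []
    for r, pan_row in enumerate(pan):
        out.append([p * ms_band[r // factor][c // factor]
                    for c, p in enumerate(pan_row)])
    return out


# Hypothetical values: a 4 x 4 high resolution band and a 2 x 2 coarse band.
pan = [[1, 2, 3, 4],
       [2, 3, 4, 5],
       [3, 4, 5, 6],
       [4, 5, 6, 7]]
ms_band = [[10, 20],
           [30, 40]]
merged = multiplicative_merge(pan, ms_band, 2)
print(merged)
```

The output has the same number of rows and columns as the high resolution input, which matches the behavior described above.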

Part 3: Introducing Radiometric Enhancement Techniques

For this part, haze reduction was used as the radiometric enhancement technique. To reduce haze in a scene, one navigates to Raster → Radiometric → Haze Reduction. This brings up the Haze Reduction window, where the image to be corrected is entered as the input. The output file is then saved in a separate folder.

Part 4: Linking Erdas Viewers to Google Earth

An Erdas viewer was then linked to Google Earth. This is done by first inserting a scene into a viewer and then clicking Connect to Google Earth from the File tab. Then, to link the views of the scene and Google Earth, the Link GE to View and Sync GE to View buttons are used. When a viewer is linked with Google Earth, Google Earth doesn't display the same spectral bands as the Erdas viewer does. Instead, it displays aerial photography with labels, which can be used as a selective image interpretation key to aid in the visual interpretation of the scene in the Erdas viewer.

Part 5: Resampling Images Using Nearest Neighbor and Bilinear Interpolation

Three common resampling methods are nearest neighbor, bilinear interpolation, and cubic convolution. In this lab, only the nearest neighbor and bilinear interpolation methods are exercised. An image can be resampled in two directions: up or down. The direction refers to how the output file size changes relative to the input. Making the pixels smaller increases the number of pixels and therefore the file size, which is resampling up. Making the pixels larger decreases the file size, which is resampling down.

To resample an image in Erdas, one navigates to Raster → Spatial → Resample Pixel Size. From there, one can change the output pixel size relative to the input image. In this case, the input image is a scene over Eau Claire taken in 2011, and the resampling is done twice. In both cases, the image is resampled up from 30 x 30 meters to 15 x 15 meters and the Square Cells check box is checked. In the first case the Nearest Neighbor method is used, and in the second case the Bilinear Interpolation method is used. This creates two separate resampled images.
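The difference between the two methods can be shown with a small sketch. It is a generic illustration of nearest neighbor and bilinear resampling by an integer factor, not the Erdas implementation; the function names and the 2 x 2 sample block are hypothetical.

```python
def resample_nearest(img, factor):
    """Upsample a 2D list by an integer factor, copying the nearest input pixel."""
    rows, cols = len(img), len(img[0])
    return [[img[r // factor][c // factor] for c in range(cols * factor)]
            for r in range(rows * factor)]


def resample_bilinear(img, factor):
    """Upsample a 2D list by an integer factor using bilinear interpolation."""
    rows, cols = len(img), len(img[0])
    out = []
    for r in range(rows * factor):
        # Center of the output pixel in input pixel coordinates, clamped so
        # that edge pixels are not extrapolated.
        y = min(max((r + 0.5) / factor - 0.5, 0.0), rows - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, rows - 1)
        fy = y - y0
        row = []
        for c in range(cols * factor):
            x = min(max((c + 0.5) / factor - 0.5, 0.0), cols - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, cols - 1)
            fx = x - x0
            # Weighted average of the four surrounding input pixels.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# Hypothetical 2 x 2 block of 30 m pixels, resampled to 15 m (factor 2).
coarse = [[10, 20],
          [30, 40]]
nearest = resample_nearest(coarse, 2)
bilinear = resample_bilinear(coarse, 2)
print(nearest)
print(bilinear)
```

Nearest neighbor only repeats existing brightness values, so the blocky edges remain, while bilinear interpolation creates intermediate values that smooth the transitions between pixels.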

Part 6: Exploring Image Mosaicking

Image mosaicking is the process of combining multiple scenes to create a single image. There are two main ways of mosaicking in Erdas. The first is by using Mosaic Express and the second is by using MosaicPro. To create a mosaic, one must first bring the scenes to be included in the mosaic into the viewer. However, before bringing in each image, the Multiple Images in Virtual Mosaic radio button must be selected under the Multiple tab in the Select Layer to Add window. In this lab, one mosaic is created using each method, and each mosaic consists of two input scenes.

Then, to create the mosaic using Mosaic Express, one navigates to Raster → Mosaic → Mosaic Express. In the Mosaic Express window, the two scenes in the viewer are added in the Input tab. Then, all of the defaults are accepted and the mosaic is created.

To create the mosaic using MosaicPro, one navigates to Raster → Mosaic → MosaicPro and then enters some information about the images to create a more seamless output mosaic. In the tool, the Compute Active Area radio button is enabled before inserting each image, the order of the images is set so that the image with the better quality is on top, the Use Histogram Matching option is selected to enable color corrections, the overlap areas are set so they can be used in the histogram matching, and the default settings are accepted for the Set Output Options Dialog and Set Overlap Function. Then, the tool is ready to run. Because more information about the images is entered, a higher quality mosaic is generated.

Part 7: Exercising Binary Change Detection

Binary change detection is used to see what parts of an area have changed over a certain period of time. This is done by looking at the brightness values of pixels between two images taken over the same area on different dates. For this lab, the images used have an extent over west central Wisconsin. One image was taken in 1991 and the other was taken in 2011.

| Fig 1.1: Two Input Operators Window |

This image gave the difference value for every pixel. Because the author is interested in which pixels changed substantially, two thresholds were set. The upper threshold is [(the mean of the difference image + 1.5 * the standard deviation of the difference image) + the value located at the center of the histogram], and the lower threshold is [-1 * (the mean of the difference image + 1.5 * the standard deviation of the difference image) + the value located at the center of the histogram]. Any pixel with a value above the upper threshold or below the lower threshold was considered to have changed substantially. The mean of the difference image was 12.253, the standard deviation was 23.946, and the value located at the center of the histogram was 23.6631. Using these values in the two equations gives a lower threshold of -24.5089 and an upper threshold of 71.8351.
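The threshold arithmetic above can be checked with a short sketch. The statistics are the ones reported in the text; the function names and the small block of sample difference values are hypothetical and only illustrate how the thresholds would be applied.

```python
# Statistics reported in the text for the difference image.
MEAN = 12.253
STD_DEV = 23.946
HIST_CENTER = 23.6631  # value located at the center of the histogram


def change_thresholds(mean, std_dev, center, k=1.5):
    """Return (lower, upper) thresholds using the two equations in the text."""
    spread = mean + k * std_dev
    return center - spread, center + spread


def flag_changed(diff_rows, lower, upper):
    """Mark pixels outside [lower, upper] as 1 (changed) and the rest as 0."""
    return [[1 if (v < lower or v > upper) else 0 for v in row]
            for row in diff_rows]


lower, upper = change_thresholds(MEAN, STD_DEV, HIST_CENTER)
print(round(lower, 4), round(upper, 4))

# Hypothetical 2 x 3 block of difference values, for illustration only.
sample = [[-40.0, 10.0, 80.0],
          [0.0, 71.0, -24.0]]
print(flag_changed(sample, lower, upper))
```

Only the first and last values in the top row of the sample fall outside the thresholds, so only those two pixels would be flagged as substantially changed.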

Next, the Spatial Modeler is used to create a new difference image that, unlike the one above, does not contain negative values. This is done by first opening the Model Maker tool by navigating to Toolbox → Model Maker → Model Maker and then inserting band 4 of the 2011 and 1991 images as raster objects into the model. Then, a tool is used to connect the two images to an output raster object. Next, the tool is configured with an expression to create the difference raster: (the 2011 image - the 1991 image + 127). The constant 127 was used because -127 was taken to be the lowest possible difference value, given that the maximum brightness value in both images is 255.
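The model expression can be mimicked on a small array as follows. This is a minimal sketch assuming 8-bit band 4 values held in plain Python lists; the function name `offset_difference` and the sample values are hypothetical and are not taken from the lab data.

```python
OFFSET = 127  # constant from the model expression in the text


def offset_difference(later, earlier, offset=OFFSET):
    """Compute (later - earlier + offset) per pixel on equally sized 2D lists."""
    if len(later) != len(earlier):
        raise ValueError("images must have the same number of rows")
    out = []
    for row_l, row_e in zip(later, earlier):
        if len(row_l) != len(row_e):
            raise ValueError("rows must have the same length")
        # Plain Python integers are used so the subtraction cannot wrap
        # around the way unsigned 8-bit arithmetic would.
        out.append([int(a) - int(b) + offset for a, b in zip(row_l, row_e)])
    return out


# Hypothetical 8-bit band 4 values, for illustration only.
band4_2011 = [[120, 30], [200, 64]]
band4_1991 = [[100, 90], [200, 10]]
shifted = offset_difference(band4_2011, band4_1991)
print(shifted)
```

With this expression, a pixel that did not change between the two dates ends up with a value of 127 rather than 0.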

| Fig 1.2: Either Or If Expression |

Results

Figure 1.3 shows the result of using the inquire box explained in part one to identify a study area, and figure 1.4 shows the result of using a shapefile to identify a study area or AOI. Figure 1.3 shows a rectangular image, which can be useful in some instances, but often a study area is not rectangular. This is why using a shapefile to create an AOI, as shown in figure 1.4, is usually a better option.

| Fig 1.3: Determining a Study Area From a Subset |

| Fig 1.4: Determining an AOI From a Shapefile |

Figure 1.5 shows the results of pansharpening an image. Both images are zoomed in so that the differences can be seen. The original multispectral image is displayed on the left, and the pansharpened image is displayed on the right. What is not shown is the third image, the higher resolution image that allowed the pansharpening to occur. The pansharpening makes the image much clearer, as the spatial resolution is increased from 30 m to 15 m. Increasing the spatial resolution of an image aids in interpreting imagery.

| Fig 1.5: Pansharpened Image vs Non Pansharpened Image |

Figure 1.6 shows the results of part 3, haze reduction. Once again, both images are zoomed in so that the differences between them can be seen. The original image is displayed on the left, and the haze reduced image is displayed on the right. When comparing the two images, the haze reduced image seems to have brighter colors, and the difference or contrast between pixels seems greater to the naked eye. Haze reduction is one way to aid in visual image interpretation.

| Fig 1.6: Haze Reduced Image vs Non Haze Reduced Image |

Figure 1.7 shows the result of part 5 by displaying the two resampled images and the original image. All three images are zoomed to the same extent so that the differences between them can be seen. The image on the left is the original image, which has a pixel size of 30 m; the image in the middle was resampled with the nearest neighbor technique to a 15 m pixel size; and the image on the right was resampled with the bilinear interpolation technique to a 15 m pixel size. The original image seems to display a stair-stepped effect in its pixels when compared to the resampled up images.

| Fig 1.7: Resampling Methods Results |

Figures 1.8 and 1.9 show the results of creating the mosaics in part 6. Figure 1.8 displays the mosaic generated by using Mosaic Express and figure 1.9 displays the mosaic generated using MosaicPro.

The MosaicPro mosaic is much smoother than the Mosaic Express output. The differences between the scenes are much more subtle and the colors match much more closely.

| Fig 1.8: Mosaic Express Output |

| Fig 1.9: MosaicPro Output |

| Fig 1.10: Histogram Change Threshold |

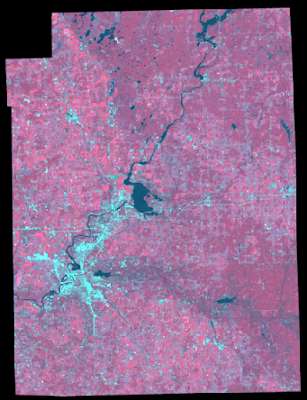

Figure 1.11 shows the map created in ArcMap of the areas in west central Wisconsin that changed between 1991 and 2011. The data for this map were created using the model in part 7. Most of the areas that changed are located away from urban centers such as Eau Claire and Chippewa Falls and away from water bodies such as the Chippewa River.

| Fig 1.11: Areas that Have Changed Between 1991 and 2011 in West Central Wisconsin |

Sources

Cyril Wilson, Miscellaneous Image Functions, 2017. https://drive.google.com/file/d/0B1RJN5un8yU8dGdsanpidExKYlk/view?usp=sharing

Maribeth Price, Mastering ArcGIS, 6th Edition, McGraw Hill, 2014.
